Last week, Microsoft announced its commitment to adopt Google’s new Agent2Agent (A2A) interoperability standards. This commitment comes at an interesting time as the market is being flooded with new agents from software vendors everywhere, including copilots, app companions, assistants, bots, and more. As organizations look to adopt agentic AI solutions at scale, a new challenge is emerging for customers: how are all these agents going to work together?

Without common standards, each agent remains locked in its own vendor ecosystem, limiting collaboration and creating silos. “As agents take on more sophisticated roles, they need access not only to diverse models and tools but also to one another,” Microsoft noted in its blog post. “The best agents won’t live in one app or cloud; they’ll operate in the flow of work, spanning models, domains and ecosystems.”

That’s what makes the emergence of standards like the new A2A (Agent2Agent) protocol and MCP (Model Context Protocol) particularly exciting. With backing from heavy hitters like Microsoft, Google, SAP, Oracle, and Salesforce, these efforts aim to define clear rules for how agents can discover each other, communicate, and collaborate regardless of who built them or where they run.

In this post, we’ll unpack what these standards mean, why they matter, and how they’re positioned to rewire the enterprise for the next era of AI-driven work.

The Role of Open Protocols in Agentic AI Adoption

The rise of agentic AI is beginning to echo a familiar story from the early days of the web. Back then, it wasn’t just the idea of a global information network that changed everything; it was the emergence of shared protocols and standards like HTTP, SSL, HTML, JavaScript, and CSS that provided the foundation for explosive growth. These standards gave developers a common language, ensured interoperability between systems, and allowed vendors to focus their energy on building differentiated experiences rather than reinventing the plumbing.

Agentic AI now seems to be approaching a similar tipping point. As more and more software vendors go to market with agent solutions, there's a huge need for standardization. Without open protocols, we're headed towards a heavily fragmented landscape of siloed agents that don’t speak the same language and can’t work together effectively. Fortunately, software vendors seem to be coming to terms with the fact that success in the agentic world doesn't have to be a zero-sum game and that interoperability is the key to unlocking the full potential of this technology.

With that in mind, let's look at both of these new protocols and see how these emerging standards are defining the rules for how agents can discover each other, negotiate tasks, exchange context, and collaborate.

Creating a Network of Agents with the A2A Protocol

The A2A protocol defines rules for how agents discover, communicate, and collaborate with one another. The protocol is built on a few foundational principles:

Decentralized Discovery: Agents should be able to locate and identify each other across domains without relying on a central registry. This opens the door to dynamic, ad hoc collaboration between agents in different systems or even organizations. Agents can advertise their capabilities using JSON-based “agent cards” (think business cards).

Cross-Vendor Compatibility: A2A isn’t tied to a single platform or provider. Instead, it defines open interfaces and messaging schemas that any agent can implement, enabling a more inclusive, vendor-neutral ecosystem.

Structured, Machine-Readable Interactions: Communication between agents is handled through well-defined protocols and schemas, making interactions reliable, interpretable, and auditable. Building on standards like HTTP, SSE, and JSON-RPC, the protocol fits naturally into existing enterprise IT stacks.

Support for Multimodal Input and Output: A2A is designed for a world where agents can work across voice, text, image, and even video. This enables richer, more natural interactions in human-agent and agent-agent scenarios.
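To make the first three principles a little more concrete, here is a minimal Python sketch of one agent reading another agent's card and preparing a structured request. It is an illustration only: the card's field names, the example URL, and the `example/sendTask` method name are assumptions made for this sketch, not the normative A2A schema. The only part taken from an existing standard is the JSON-RPC 2.0 envelope (`jsonrpc`, `id`, `method`, `params`).

```python
import json
from typing import Optional

# Illustrative agent card. Field names and the URL are assumptions for this
# sketch, not the official A2A schema.
AGENT_CARD = {
    "name": "invoice-agent",
    "description": "Answers questions about supplier invoices",
    "url": "https://agents.example.com/invoice",
    "skills": [
        {"id": "lookup_invoice", "description": "Find an invoice by number"},
    ],
    "input_modes": ["text"],
    "output_modes": ["text"],
}


def find_skill(card: dict, skill_id: str) -> Optional[dict]:
    """Return the advertised skill with the given id, or None if absent."""
    for skill in card.get("skills", []):
        if skill.get("id") == skill_id:
            return skill
    return None


def build_request(request_id: int, method: str, params: dict) -> str:
    """Serialize a JSON-RPC 2.0 request envelope."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
    )


# The remote agent publishes its card as JSON; the calling agent parses it.
card = json.loads(json.dumps(AGENT_CARD))
skill = find_skill(card, "lookup_invoice")

# "example/sendTask" is a hypothetical method name used only for illustration.
request = build_request(
    1, "example/sendTask", {"skill": skill["id"], "text": "Status of invoice 1001?"}
)
print(request)
```

In a real deployment the card would be fetched over HTTP from the remote agent and the request posted to the URL the card advertises. The sketch skips the network hop so the data shapes are easier to see.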

The animation below illustrates how these concepts come together in a practical integration scenario. For a more detailed walkthrough, check out this demonstration video from Google.

Figure 1: Understanding the Mechanics of the A2A Protocol

Connecting Agents to Knowledge Sources and Business Systems

Whereas the A2A protocol defines the rules for agent collaboration, the Model Context Protocol (MCP) defines a standard for enabling AI models and agents to discover and integrate with external data sources and tools. It focuses on the what and how of contextual understanding. In other words, it defines the protocol rules for how agents obtain relevant data, interpret it, and apply it to real-world tasks.

You can think of it as the USB standard for agents. Back in the day, connecting a printer or external drive meant dealing with all sorts of cables, ports, and drivers because every device came with its own set of setup instructions. Then USB came along and made it simple: one universal way to plug things in and just have them work. MCP brings that same simplicity to intelligent agents. It gives them a standardized, low-friction way to “plug into” enterprise systems, APIs, and databases.

Figure 2: Understanding the Mechanics of MCP

One of MCP’s most powerful attributes is its backwards compatibility. Because it’s built on common web and messaging protocols like HTTP and JSON, it can be supported by virtually any system, even legacy platforms that were never designed with AI in mind. That means older ERPs, mainframes, and proprietary business apps can participate in agentic workflows without a complete overhaul. If a system can expose an endpoint or respond to a webhook, it can be part of an MCP-enabled ecosystem.
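As a rough illustration of how little a legacy system needs in order to take part, the Python sketch below wraps a stand-in "legacy" lookup behind a handler that answers two JSON-RPC 2.0 requests, `tools/list` and `tools/call`, which are the method names MCP uses for tool discovery and invocation. Everything else is an assumption made for the example: the `stock_level` tool, the `LEGACY_STOCK` data, and the simplified result shape. A real MCP server also performs an initialization handshake and runs over a transport such as stdio or HTTP, both of which are omitted here.

```python
import json

# Hypothetical stand-in for a legacy system, e.g. an ERP inventory table.
LEGACY_STOCK = {"SKU-1": 12, "SKU-2": 0}


def legacy_stock_level(sku: str) -> int:
    """Pretend call into the legacy system."""
    return LEGACY_STOCK[sku]


# Tool description advertised to agents (simplified for this sketch).
TOOLS = [
    {
        "name": "stock_level",
        "description": "Return units on hand for a SKU",
        "inputSchema": {
            "type": "object",
            "properties": {"sku": {"type": "string"}},
            "required": ["sku"],
        },
    }
]


def _text_result(text: str, is_error: bool) -> dict:
    """Build a simplified text-only tool result."""
    return {"content": [{"type": "text", "text": text}], "isError": is_error}


def handle(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request and return the serialized response."""
    req = json.loads(raw)
    method = req.get("method")
    params = req.get("params") or {}
    if method == "tools/list":
        body = {"result": {"tools": TOOLS}}
    elif method == "tools/call" and params.get("name") == "stock_level":
        sku = (params.get("arguments") or {}).get("sku")
        if sku in LEGACY_STOCK:
            body = {"result": _text_result(str(legacy_stock_level(sku)), False)}
        else:
            body = {"result": _text_result(f"unknown SKU: {sku}", True)}
    else:
        # -32601 is the JSON-RPC 2.0 code for "Method not found".
        body = {"error": {"code": -32601, "message": "Method not found"}}
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), **body})


call = {
    "jsonrpc": "2.0",
    "id": 7,
    "method": "tools/call",
    "params": {"name": "stock_level", "arguments": {"sku": "SKU-1"}},
}
print(handle(json.dumps(call)))
```

The legacy function itself is untouched; only a thin translation layer is added in front of it.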

This opens the door to AI-powered automation in places where it's historically been too expensive or complex to integrate. With MCP, organizations can innovate around the edges while keeping their core/legacy systems untouched.

MCP also facilitates the design of modular, composable agents. Because the protocol abstracts how agents acquire and consume context, developers can build reusable agents that adapt to different data sources and environments without having to rewrite core logic. This accelerates development, encourages reuse, and simplifies governance since context flows are handled in a standardized, inspectable way. The video below illustrates how these concepts work using Microsoft Copilot Studio and GitHub.
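The composability idea can be sketched in a few lines of Python. The example below is not MCP itself; it only shows the design principle of keeping the agent's core logic separate from the way context is acquired. All names here (`ContextSource`, `InMemoryDocs`, `KeyValueStore`, `SummaryAgent`) are hypothetical and exist only for this illustration.

```python
from typing import Dict, List, Protocol


class ContextSource(Protocol):
    """Anything that can return context snippets for a query."""

    def fetch(self, query: str) -> List[str]: ...


class InMemoryDocs:
    """Context source backed by a list of free-text documents."""

    def __init__(self, docs: List[str]) -> None:
        self.docs = docs

    def fetch(self, query: str) -> List[str]:
        return [d for d in self.docs if query.lower() in d.lower()]


class KeyValueStore:
    """Context source backed by key/value records."""

    def __init__(self, records: Dict[str, str]) -> None:
        self.records = records

    def fetch(self, query: str) -> List[str]:
        return [
            f"{key}: {value}"
            for key, value in self.records.items()
            if query.lower() in key.lower()
        ]


class SummaryAgent:
    """Core agent logic; it never needs to know where context comes from."""

    def __init__(self, source: ContextSource) -> None:
        self.source = source

    def answer(self, query: str) -> str:
        hits = self.source.fetch(query)
        if not hits:
            return f"No context found for '{query}'."
        return f"{len(hits)} item(s) for '{query}': " + "; ".join(hits)


docs_agent = SummaryAgent(InMemoryDocs(["Invoice 1001 is paid", "Order 7 shipped"]))
kv_agent = SummaryAgent(KeyValueStore({"invoice-1001": "paid"}))
print(docs_agent.answer("invoice"))
print(kv_agent.answer("invoice"))
```

Swapping the data source changes one constructor argument, while `SummaryAgent.answer` stays the same. A standardized protocol plays the role of `ContextSource` across vendors and systems.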

Where We're Headed With All This

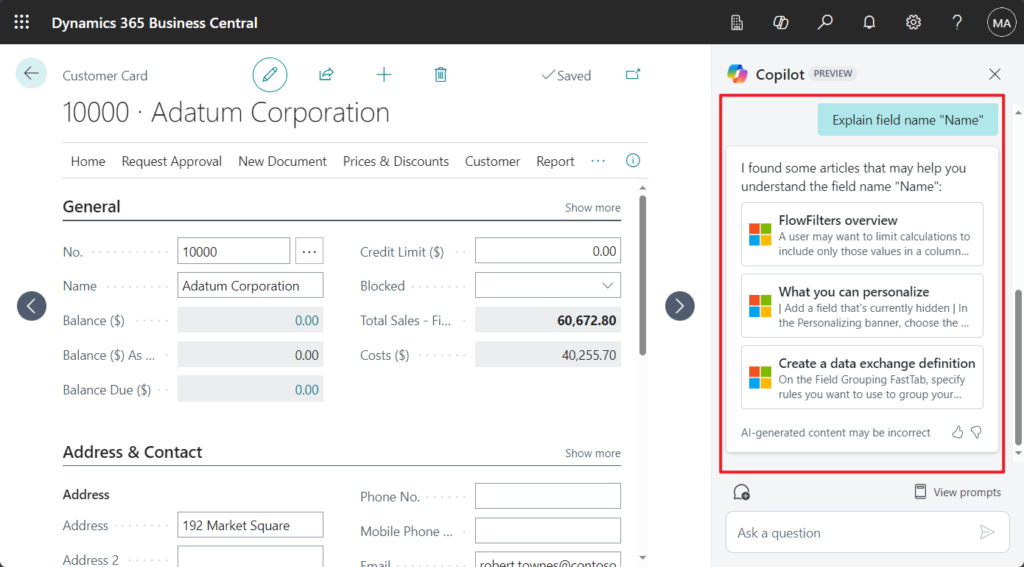

While it's still somewhat early days in the agentic AI race, the emergence of standards like A2A and MCP is providing vendors and customers alike with a clear(er) vision of where we're headed. Today, agents/copilots are something of a novelty, tucked off to the side of traditional form-based apps where the "real work" is being done.

Figure 3: Working with Copilot in Dynamics 365 Business Central

However, in the not-too-distant future, we expect this trend to reverse entirely—so much so that agents will become the main attraction, and the form-based apps will be the ones pushed to the margins. In this new paradigm, users will engage primarily through conversational interfaces, with forms and fields stepping in only when absolutely necessary. We touched on these concepts at length in a previous article.

As more and more vendors fully embrace the A2A and MCP protocols, we see an even bigger trend emerging. Instead of having to navigate between lots of vendor-specific agents and copilots, we expect users to be able to work with a more centralized "super agent" that routes incoming requests to the appropriate agent, much like a switchboard operator.

Figure 4: Super Agents as the Switchboard Operators for Agentic AI

Much like smartphone home screens and superapps before them, these super agents will provide users with a next-generation portal that's intelligent, adaptive, and deeply integrated. But more importantly, they’ll finally deliver on the long-standing promise of enterprise portals: a simple, user-friendly interface that brings everything together in one place. Instead of hunting through apps, menus, or intranet pages, users will be able to ask, delegate, or explore with the super agent guiding them directly to the information, tools, or actions they need.
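The switchboard idea can be sketched as a small router. The Python example below is a toy: it picks a downstream agent by simple keyword overlap, whereas a real super agent would more likely use a language model or the capabilities that each agent advertises. The `SuperAgent` class, the agent names, and the keywords are all hypothetical.

```python
import re
from typing import Callable, List, Set, Tuple

Handler = Callable[[str], str]


class SuperAgent:
    """Toy switchboard that forwards a request to the best-matching agent."""

    def __init__(self) -> None:
        self._routes: List[Tuple[str, Set[str], Handler]] = []

    def register(self, name: str, keywords: Set[str], handler: Handler) -> None:
        """Register a downstream agent with the keywords it can handle."""
        self._routes.append((name, {k.lower() for k in keywords}, handler))

    def route(self, request: str) -> Tuple[str, str]:
        """Return (agent name, reply) for the agent with most keyword hits."""
        words = set(re.findall(r"[a-z0-9]+", request.lower()))
        best_name, best_score, best_handler = "fallback", 0, None
        for name, keywords, handler in self._routes:
            score = len(keywords & words)
            if score > best_score:
                best_name, best_score, best_handler = name, score, handler
        if best_handler is None:
            return "fallback", "Sorry, no registered agent can handle that request."
        return best_name, best_handler(request)


hub = SuperAgent()
hub.register("hr-agent", {"vacation", "payroll"}, lambda r: "HR agent: request received")
hub.register("finance-agent", {"invoice", "budget"}, lambda r: "Finance agent: request received")

print(hub.route("What is the status of invoice 1001?"))
print(hub.route("Book a meeting room"))
```

The user only ever talks to `hub`; which vendor's agent does the work is decided behind the scenes.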

The Emergence of a New Intelligence Layer

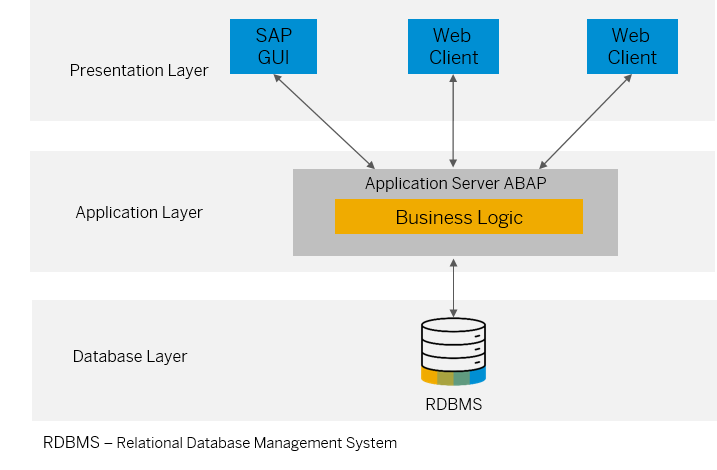

For the past 40 years, the n-tier architecture has been the blueprint for enterprise software applications. From client-server to web-based systems, this layered model (typically divided into presentation, application, and data tiers) has given developers a reliable foundation for building scalable business applications. But as the digital landscape evolves, a new intelligence layer is starting to take shape, and it could fundamentally reshape how we interact with enterprise systems. This new layer will be powered by intelligent agents.

Figure 5: Example of the Classic N-Tier Architecture for Business Applications

This emerging intelligence layer introduces a radically different capability: one that blends user interface and decision support into a conversational, context-aware experience. It’s not just about surfacing data; it’s about interpreting, acting, and collaborating in real time.

Industry leaders have recognized this shift as well. Satya Nadella, CEO of Microsoft, has emphasized that AI agents are becoming the new user interface, transforming how users engage with systems. Similarly, Bill McDermott, CEO of ServiceNow, has articulated a vision where traditional application stacks give way to AI-driven platforms. He stated, “21st century problems cannot be solved with 20th century architectures,” suggesting that legacy systems will serve as core databases feeding into (agentic) platforms where "the real work is happening".

Of course, there’s still architectural work to be done. Standards like A2A and MCP are just now being defined, and questions around orchestration, trust, and performance are still in flux. But the potential is clear: this intelligence layer could be the missing link that enterprise architects have been searching for—a way to evolve ERP, CRM, and other core systems from highly expensive systems of record into decision support systems that help people work smarter, faster, and more strategically.

Closing Thoughts

The enterprise software landscape is undergoing a profound transformation right now. As open standards like A2A and MCP begin to take hold, the foundation is being laid for a truly interoperable agent ecosystem. In this ecosystem, software applications will no longer operate in isolated silos, but work together as a coordinated intelligence layer on top of our existing systems.

While we're still early in this shift, the implications are significant. Enterprise architects now have a real opportunity to reimagine how work gets done. What used to be aspirational ideas about "smart systems" and "digital assistants" are quickly becoming practical tools that can be deployed and scaled. We'll have a lot more to say on these topics in the weeks and months ahead, so stay tuned.